10 Best Machine Learning Courses for 2024: Scikit-learn, TensorFlow, and more

Demystify the math behind Machine Learning and master popular machine learning libraries like TensorFlow and scikit-learn with these FREE and paid courses.

Are you a developer keen on building machine learning models for your business? A newbie aspiring to dive into the AI hype? Or perhaps a non-techie curious about the buzz surrounding AI?

Wherever your interests lie, we’ve got the right course for you — from the comprehensive, math-intensive courses that explain machine learning from ground zero, to the swift and straightforward courses that quickly familiarize you with popular machine learning libraries.

Learn from the best free and premium courses offered by leading universities (MIT, Stanford, University of London) and renowned institutions (Google, Microsoft, Kaggle, DataCamp) in this Best Courses Guide (BCG).

Click on the shortcuts for more details:

- Top Picks

- What is Machine Learning?

- Why Learn Machine Learning?

- BCG Stats

- Notable Machine Learning YouTubers to Follow

- Additional Machine Learning Resources

- BCG Methodology

Here are my top picks. Click on one to skip to the course details:

What is Machine Learning?

The very first definition of Machine Learning, coined in 1959 by the pioneering father Arthur Samuel, is as follows: “[the] field of study that gives computers the ability to learn without being explicitly programmed”.

Okay, definitions are boring. Let me give an analogy: think of machine learning like teaching a toddler how to walk.

At first, the toddler doesn’t know how to walk. They start by observing others walking around them. They try to stand up, take a step, and often fall. But every time they fall, they learn something new — maybe they need to move their foot a certain way, or keep their balance.

This is similar to how machine learning algorithms learn. They start with no knowledge. We feed them data (like the toddler observing people walk), and they make predictions based on that data. At first, these predictions may not be accurate (like the toddler falling). But with every mistake, they adjust their parameters slightly (like the toddler learning to balance better), and over time, they get better at making accurate predictions (like the toddler learning to walk).

Although many machine learning algorithms share the ability to learn from experience with humans, deep learning, a subfield of machine learning, goes a step further by emulating the structure and functionality of the human brain through artificial neural networks. As such, they are used for more ‘creative’ tasks, like image recognition, natural language processing, and speech recognition. If you’d want a deeper dive into neural networks, our Deep Learning BCG has courses suitable for both machine learning beginners and experts.

Why Learn Machine Learning?

The pervasiveness of machine learning should already be apparent with the meteoric rise and widespread popularity of ChatGPT and other Large Language Models (LLMs). Among other use cases of machine learning are recommendation systems like YouTube’s, self-driving cars like Tesla’s, and detecting fraudulent transactions in banking systems.

Studies conducted by LinkedIn, Gartner, Statista, Fortune Business Insights, World Economic Forum, and US Bureau of Labor Statistics, all point towards the same trend: the demand for AI and machine learning specialists will only continue to grow skywards in the coming decade. And that demand is reflected in the salaries offered for these positions, with the average machine learning engineer making between $116,918 to $161,621 according to various salary aggregator websites.

Even if you’re not aiming for a career in machine learning, other high-demand roles may require experience with it, such as data scientists and business analysts.

Disclaimer: if you’re interested in gathering insights from data using machine learning instead of machine learning itself, then you’re (likely) in the wrong place. Click here instead → Data Science BCG.

BCG Stats

- Eight of the courses are free or free-to-audit, while two are paid.

- Of all the programming-related courses, only ZeroToMastery’s course requires no prior knowledge of programming.

- All the programming-related courses use Python, but Microsoft’s course also offers an R alternative to their exercises.

Best Rigorous University-Level Specialization (DeepLearning.AI and Stanford University)

| Reasons to Take |

|

| Reasons to Avoid |

|

Andrew Ng’s specialization is the course for Machine Learning, striking the best balance between theory and application.

This free-to-audit specialization is the successor to the most popular course of all time, which, over its 11-year lifespan, had amassed an impressive 4.8 million learners. But does it truly live up to its predecessor?

The answer is a resounding yes! Garnering an exceptional average rating of 4.9 stars, coupled with glowing reviews and testimonials from across YouTube and Reddit, this specialization is a surefire choice for the motivated.

What sets it apart from the crowd? Andrew Ng takes a bottom-up approach, where you’ll learn the theory first and code the models from scratch. He emphasizes the importance of grasping the intuition behind how these models function, rather than merely gluing code together.

You’ll have the opportunity to implement basic machine learning models and deep learning neural networks using industry-standard libraries like numpy, TensorFlow, and scikit-learn.

I strongly recommend that you invest in the paid version of the specialization (or apply for financial aid). This will grant you access to autograded quizzes that test your conceptual comprehension, as well as programming labs that mirror real-world challenges and projects. Alternatively, you can audit each course in the specialization individually for free, but you’ll miss out on the graded exercises.

A word of caution: this course involves stomaching some math and Python coding. If you feel the need to brush up on your math skills before enrolling, take a look at my review of the best math course for ML. Additionally, the DeepLearning.AI community forum is a valuable resource, offering a network of mentors and fellow learners to consult when you encounter difficulties.

| Institution | DeepLearning.AI and Stanford University |

| Provider | Coursera |

| Instructors | Andrew Ng, Aarti Bagul, Eddy Shyu and Geoff Ladwig |

| Prerequisites | Basic coding knowledge and high-school level math |

| Workload | 50–100 hours |

| Enrollments | 316K |

| Rating | 4.9 / 5.0 (18K) |

| Cost | Free-to-Audit |

| Exercises | Quizzes and Labs |

| Certificate | Paid |

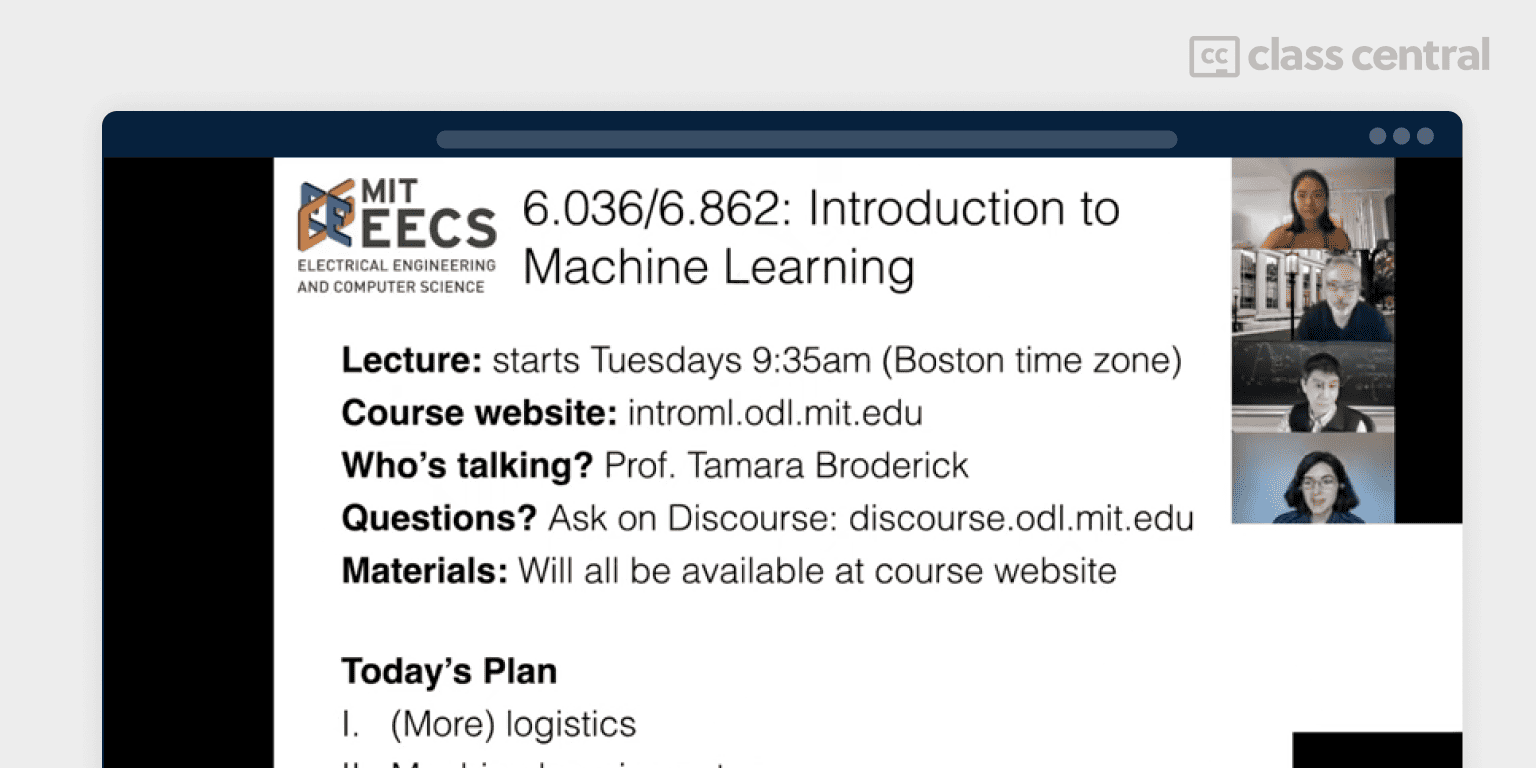

Best Free Rigorous University-Level Course (MIT)

| Reasons to Take |

|

| Reasons to Avoid |

|

If you want a completely free alternative to Andrew Ng’s course, the only one that matches it in both mathematical depth and breadth is MIT’s Introduction to Machine Learning. And by completely free, I mean you’ll have full access to not only the video lectures and reading materials, but also the autograded in-browser programming exercises, labs, and quizzes.

The big difference between this MIT course and Andrew Ng’s course is that this course focuses more on the mathematics of machine learning and deep learning. Prof. Leslie Kaelbing guides you through the process of deriving algorithms, understanding the intuition behind them, and then implementing them from scratch in Python — all without the crutch of a machine learning library.

Thus, this course is perfect for anyone who wants a thorough understanding of machine learning practices, but less so for those who are looking for a quick, practical introduction to the field. But well, that’s to be expected of an MIT course.

Don’t let my warning mislead you into thinking this course is all theory and no application though. You’ll also learn the dos-and-don’ts of training machine learning models, you’ll see how these seemingly abstract concepts solve difficult real-world problems, such as recommending products based on a customer’s purchase history and recognizing text in images.

Even with prior experience with Python, basic linear algebra, and multivariate calculus, expect to invest time and effort on the lectures and exercises. Some of the topics you’ll learn are:

- Linear Classifiers and the Perceptron algorithm

- Feature Representation

- Logistic Regression and Gradient Descent

- Neural Networks and making them work effectively

- Convolutional Neural Networks for image recognition

- Sequential Models for handling sequential data

- Reinforcement Learning for decision-making

- Recurrent Neural Networks for sequential data

- Recommender Systems for personalized recommendations

- Decision Trees and Nearest Neighbors algorithms

| Institution | Massachusetts Institute of Technology |

| Provider | Mit Open Learning Library |

| Instructor | Leslie Kaelbing |

| Prerequisites | Computer programming (python); Calculus; Linear Algebra |

| Workload | >100 hours |

| Cost | Free |

| Exercises | Quizzes and Labs |

| Certificate | None |

Best Free Shortest Hands-On Course (Kaggle)

| Reasons to Take |

|

| Reasons to Avoid |

|

Learn the core concepts of machine learning and build your first models in this 3-hour long Kaggle course. Yes, that Kaggle which hosts international machine learning competitions.

If you’re confident in your Python skills and want to straight away get into developing and training machine learning models, this course is the perfect course for you.

Why? Because you’ll learn hands-on exclusively through the Jupyter notebooks hosted online. You’ll first be given a code example with explanations on what it is doing. Then, you’re tasked to complete the coding exercises afterwards. Simple but effective, isn’t it?

Each lesson is accompanied by a short less-than-ten-minutes long video lecture, which are optional to watch.

As for the contents of the course, you’ll study the classical machine learning algorithms, decision trees and random forests, to help predict unknown data from known outcomes. You’ll also understand how to measure the performance of your model, and avoid underfitting or overfitting for better performance. And since Kaggle is the platform for machine learning competitions, you’ll learn how to participate in one as well.

| Institution | Kaggle |

| Instructors | Dan Becker |

| Prerequisites | Python |

| Workload | 3 hours |

| Cost | Free |

| Exercises | Jupyter Notebooks |

| Certificate | Free |

Best Free Tensorflow Course (Google)

| Reasons to Take |

|

| Reasons to Avoid |

|

Machine Learning Crash Course with TensorFlow APIs by Google is a free fast-paced introduction for complete newbies to machine learning (and some deep learning).

You’ll learn through a mix of video lectures and articles with interactive examples, helpful diagrams, and real-world case studies. And it’s all easy to digest too, thanks to the short length of its articles and after-chapter MCQ quizzes to help you recall what you’ve read.

There are’s also Playground Exercises which help you simulate training a model, and I find it really handy to see how changing parameters or training data percentage affects the output quality.

By the end of the course, you’ll be familiar with best practices when training machine learning models, as well as the types of machine learning algorithms and neural networks. Additionally, you’ll be able to identify and evaluate bias.

Is this course too shallow for you, or perhaps you’d prefer watching video lectures instead? Read my TensorFlow BCG BCG TensorFlow for alternatives and other goodies.

| Institution | |

| Prerequisites | Basic experience with Python and high-school level linear algebra and statistics |

| Workload | 15 hours |

| Cost | Free |

| Exercises | Google Colab notebooks |

| Certificate | None |

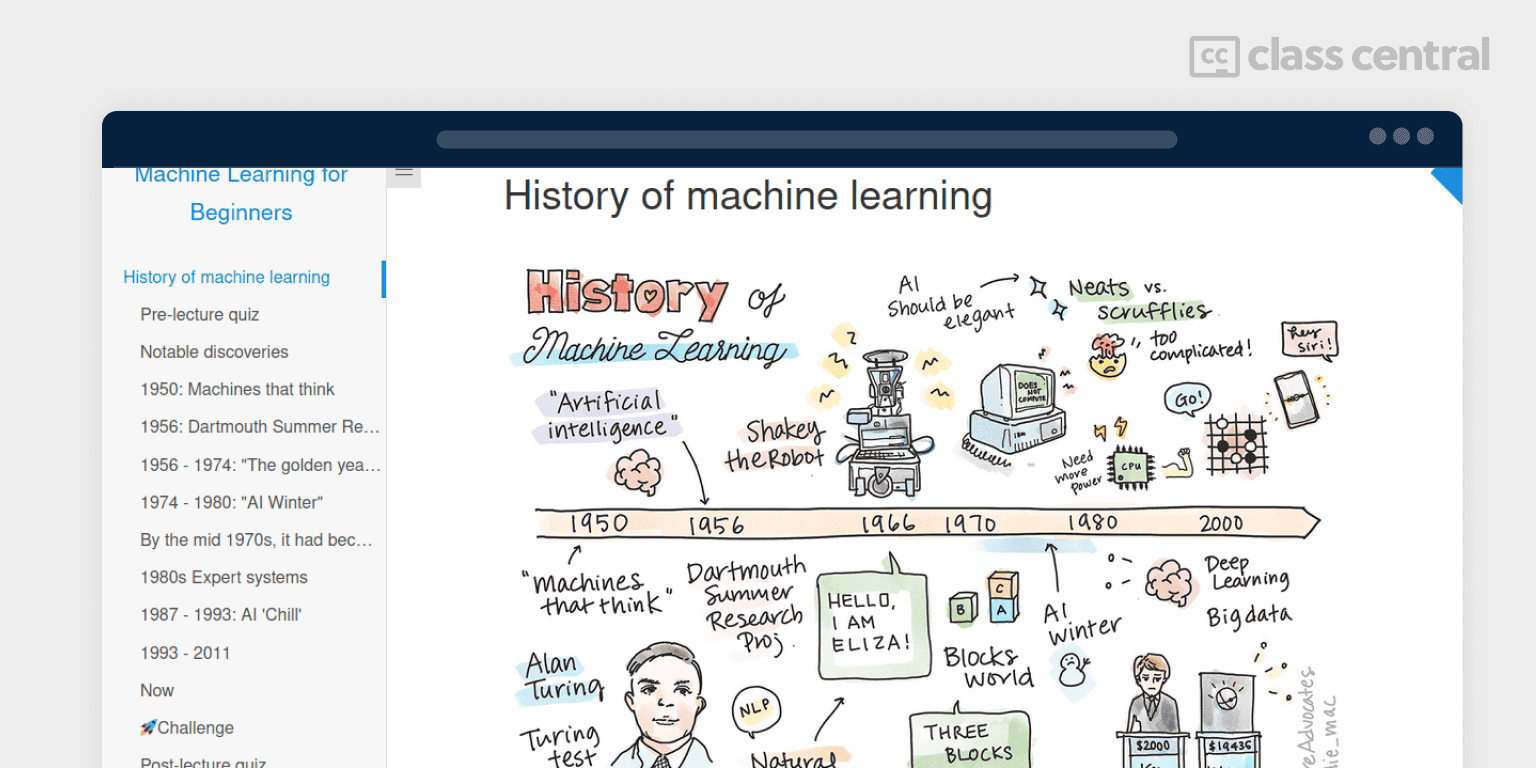

Best Free Gentle Project-Based Course (Microsoft)

| Reasons to Take |

|

| Reasons to Avoid |

|

Not all introductory courses to Machine Learning require a college education in mathematics. And they don’t have to be drowse-inducingly technical either, as demonstrated in this free course from Microsoft.

In this 12-weeks-long course, you’ll study and survey the vast evergreen fields of machine learning, from humble topics like regression, classification, and clustering, to more advanced applications like natural language processing, time series forecasting, and reinforcement learning.

How can you be proficient in building machine learning models without having the mathematical knowledge behind them? Easy: you’ll develop intuition through engaging hands-on learning. And to keep things simple, it avoids any and all deep learning shenanigans.

Machine Learning for Beginners has 26 lessons all together, with visualizations and real-world examples to help digest the content, pre- and post-lessons quizzes to help retain what you’ve learned, and supplemental video lectures and walkthroughs to further enhance your understanding. And to keep things interesting, each new machine learning topic is themed with a different culture to give you the feeling of exploration. These can be seen in the projects and exercises (with solutions) that can be completed in either Python or R, whichever suits your fancy.

| Institution | Microsoft |

| Provider | GitHub |

| Prerequisites | Basic experience with Python or R |

| Workload | 12 weeks |

| Forks | 13K |

| Stars | 64K |

| Cost | Free |

| Exercises | Quizzes and Labs |

| Certificate | None |

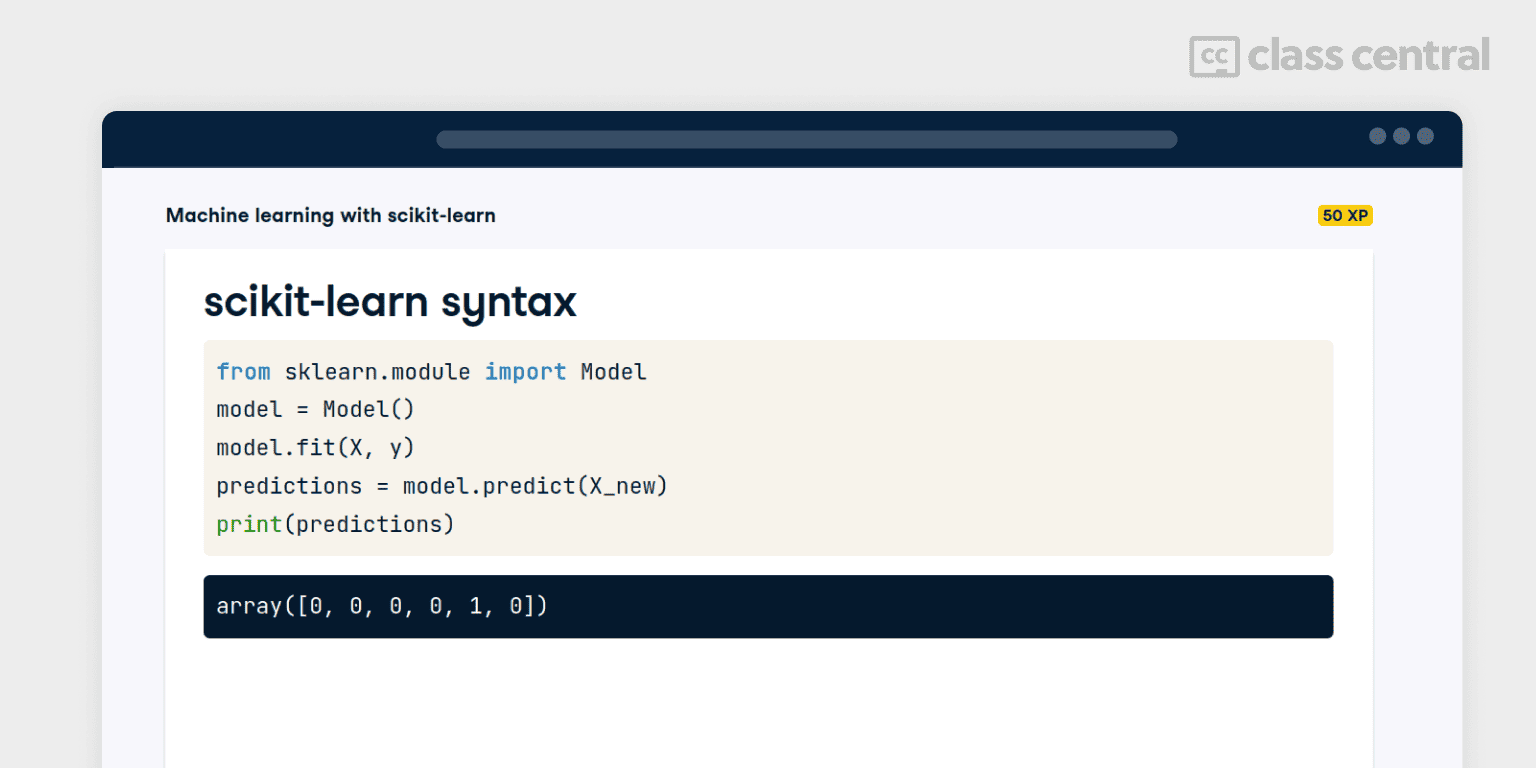

Best Paid Structured Learning Path (DataCamp)

| Reasons to Take |

|

| Reasons to Avoid |

|

Do you want a comprehensive education of machine learning, in theory and practice, from start to scratch? That’s what you’ll get from DataCamp’s paid career track to help you land a job as a machine learning scientist!

This course will teach you the tools needed to perform supervised, unsupervised, and deep learning. By the end of the course, you’ll be able to process data for features, train your models, assess performance, and tune parameters for better performance. Furthermore, you’ll also learn how to handle large datasets with tools like Spark, understand the use cases of machine learning in fields like natural language processing and image processing, and compete in Kaggle competitions.

One thing I like about DataCamp is that it’s hands-on. After each lesson, the course forces you to apply what you’ve learned by completing a coding exercise or MCQ. Not to mention, everything is done in the browser too, making your learning experience seamless.

DataCamp has two other career tracks related to machine learning: Machine Learning Scientist with R, an alternative version of this course using the R programming language, and Machine Learning Engineer, which teaches you MLOps (model deployment, operations, monitoring, and maintenance). You should take the latter after finishing this course.

| Institution | DataCamp |

| Instructor | George Boorman et al |

| Prerequisites | Python |

| Workload | 93 hours |

| Cost | Paid subscription |

| Exercises | Quizzes and Labs |

| Certificate | Paid |

Best Free Hands-On YouTube Workshop (Jovian)

| Reasons to Take |

|

| Reasons to Avoid |

|

Experience the entire machine learning workflow, from building models, to training them, to deploying to the cloud in this free 18-hour long YouTube workshop.

You’ll learn exclusively by coding and solving the tasks given in the online Jupyter notebooks, with Aakash explaining things along the way. Thus, this course is extremely hands-on, and the problems given are based on the real world too. All you need to do this course is an internet connection, basic knowledge of Python, and some high school-level statistics.

As for the libraries you’ll cover in the course, well, the name Machine Learning with Python and scikit-Learn should have already clued you in; it’s scikit-learn all the way down, with a sprinkle of numpy, pandas and matplotlib. By the end of the course, you’ll be able to handle any machine learning project while following best practices, whether it involves linear and logistic regression, or tree-based models like decision trees and gradient-boosting machines, or even unsupervised learning.

| Channel | freeCodeCamp |

| Instructor | Aakash N S (from Jovian) |

| Prerequisites | Basic knowledge of Python and statistics |

| Workload | 18 hours |

| Views | 122K |

| Likes | 4.1K |

| Cost | Free |

| Exercises | Interactive Jupyter Notebooks |

| Certificate | None |

Best Free Course for Non-Experts (University of London)

| Reasons to Take |

|

| Reasons to Avoid |

|

Machine Learning for All by the University of London aims to make machine learning accessible to everyone, regardless of math or programming background.

What makes this course stand out from other non-technical courses is that you’ll actually experience what it’s like to do machine learning with an interactive project, from collecting data, to training a model, and finally testing its performance. That’s good news for you if you’re interested in pursuing a machine learning career, or for your technical peers, if you want to step in their shoes and understand what’s possible and what’s not.

To any learners auditing the course, rejoice as this project and other practice quizzes are accessible to you. What’s not free are the peer reviews and quizzes needed to earn a grade for certification.

By the end of this course, you’ll be able to:

- Understand the basics of how modern machine learning technologies work and what problems they solve

- Explain and predict how data affects the results of machine learning

- Gain practical experience training a machine learning model through a no-code interactive platform

- Contemplate the potential opportunities and risks of machine learning to society.

| Institution | University of London |

| Provider | Coursera |

| Instructor | Marco Gillies |

| Prerequisites | None |

| Workload | 21 hours |

| Enrollments | 160K |

| Rating | 4.7 / 5.0 (3.3K) |

| Cost | Free-to-audit |

| Exercises | Quizzes, discussion prompts, project, and peer review |

| Certificate | Paid |

Best Free Specialization for Applied Math (DeepLearning.AI)

| Reasons to Take |

|

| Reasons to Avoid |

|

Are you interested in grasping the intricacies of machine learning algorithms, but get dizzy when terms like “determinants”, “derivatives”, or “probability distributions” get thrown around as casually as a game of frisbee?

Mathematics for Machine Learning and Data Science will take your rudimentary high school math knowledge to a level where you can comfortably sit down and read through most machine learning papers, coffee in hand.

Rather than dredging through dense textbooks, this specialization makes math approachable by making use of short and to-the-point video lectures filled with easy-to-understand examples that you can find in the real world. There are also interactive graphing tools to help you visualize equations.

And to make sure the knowledge sticks to your brain, you’ll have quizzes for theory and labs where you’ll put these mathematical concepts to action by writing code. However, do note that most of these are graded and by extension are only available to certificate-takers (psst, auditors can find student-completed exercises on GitHub).

Three courses make up the specialization, all of which can be audited individually for free, and the topics they cover are:

- Linear Algebra: vectors, matrices, linear transformations, systems of equations, determinants)

- Calculus: optimization, derivatives, maximizing/minimizing function)

- Statistics and Probability: P values, hypothesis testing, probability distributions, maximum likelihood estimation)

On a side note, University of London also has their own Math for Machine Learning specialization. Why did I prefer DeepLearning.AI’s over theirs? First, this course covers statistics, which is more fundamental to ML than Principal Component Analysis. Second, the discussion forum is much more active, with mentors to assist you if you get stuck. Third, it’s more recent.

| Institution | DeepLearning.AI |

| Provider | Coursera |

| Instructor | Anshuman Singh, Elena Sanina, Luis Serrano and Magdalena Bouza |

| Prerequisites | High school level mathematics and beginner understanding of machine learning |

| Workload | 65 hours |

| Enrollments | 54K |

| Rating | 4.6 / 5.0 (1.3K) |

| Cost | Free-to-audit |

| Exercises | Graded quizzes and labs |

| Certificate | Paid |

Best Paid Course for Complete Programming Beginners (ZeroToMastery)

| Reasons to Take |

|

| Reasons to Avoid |

|

Don’t know lick about programming, but want to learn both Python and Machine Learning in one go?

You’re an ambitious one, and it just so happens that I have the right course for you: meet Complete Machine Learning & Data Science Bootcamp 2023.

As the course name suggests, it doesn’t solely focus on machine learning. So try to consider the data science related topics as an added bonus.

ZeroToMastery aims to bring you up to speed with the tools, methods, and mindset of a machine learning professional. Starting from zero knowledge of programming, by the end of the course you’ll be able to write Python code to explore and visualize data, and then extrapolate insights from it using machine learning and deep learning. You’ll build many projects throughout the course to help you get hands-on experience and boost your resume, with interesting projects like Bulldozer Price Predictor and Dog Breed Image Classifier — all in Google Colab notebooks.

The course also introduces auxiliary skills you definitely should have as a machine learning professional, such as competing in Kaggle competitions, presenting your work to non-techies, contributing to open source projects, and popular tools.

| Organization | Zero To Mastery |

| Provider | Udemy |

| Instructor | Andrei Neagoie and Daniel Bourke |

| Prerequisites | None |

| Workload | 44 hours |

| Enrollments | 113K |

| Rating | 4.6 / 5.0 (20K) |

| Cost | Paid |

| Exercises | Interactive coding exercises, notebooks, and capstone projects |

| Certificate | Paid |

Next steps:

Notable Machine Learning YouTubers to Follow

- Sentdex a.k.a Harrison Kinsley: Very prolific educational YouTuber with over 1.2K videos uploaded. His interests are very wide, but most of his courses and tutorials feature AI, Machine Learning, and Deep Learning with Python.

Additional Machine Learning Resources

- 3Blue1Brown’s Math Courses (Free): 3B1B is probably the most well-known math educator on YouTube, thanks to his ridiculously beautiful animations in his lectures that help you visualize and build intuition behind many abstract concepts of linear algebra, calculus, and neural networks. I highly recommend you check these out.

- Machine Learning Roadmap by Daniel Bourke (Free): Two-and-a-half hour long video breaking down all the topics necessary for a successful machine learning career. Watch to discover what to learn next.

- An Introduction to Statistical Learning (Free): The bible of machine learning, a staple read for those serious in learning the fundamentals. Math-heavy with programming exercises available in Python and R.

Best Courses Guide (BCG) Methodology

I built this ranking following the now tried-and-tested methodology used in previous BCGs (you can find them all here). It involves a three-step process:

- Research: I started by leveraging Class Central’s database with 200K online courses and 200K+ reviews. Then, I made a preliminary selection of 1800+ Machine Learning courses by rating, reviews, and bookmarks.

- Evaluate: I read through reviews on Class Central, Reddit, and course providers to understand what other learners thought about each course and combined it with my own experience as a learner.

- Select: Well-made courses were picked if they presented valuable and engaging content, fit a set of criteria and be ranked according to comprehensive curriculum, affordability, release date, ratings and enrollments.

Tags

BorderGuard

That Columbia University edX course on Machine Learning is probably the WORST. I have taken every one on the web. I would say the Analytics course edX from MIT for practical machine learning is good, though hasn’t been updated in years. The University of Washington series on Coursera is better than Ng’s course though not as comprehensive in the subject areas covered. Areas they do cover are done much better than Ng.

Trang Le

Thanks for your input. I agree that the Analytics course by MIT is excellent and I highly recommend it for anyone in any field.

I don’t quite get the fuss about Coursera. Having done a few courses on that website, I find most of the courses to lack depth. If a course asks you to spend only a few hours every week, then the outcomes you’ll get are just that.

patta chitta

wow that us really helpful for each and every students. any way thanks again

Antonello

This list misses the new MITx “Machine Learning with Python-From Linear Models to Deep Learning” one : https://www.edx.org/course/machine-learning-with-python-from-linear-models-to

Hard, very hard (for me).. 4-5 days/week full time…..

Muvaffak GOZAYDIN

David

I know Dhawal Shah since 2012 . He has done a great job .

This last one, IO have not see before . Shame on me .

Wonderful presentation Very helpful .

After the heading ” Competition ”

You should have name of the course at least be bold . As it is it seems they are worthless . But are not .